Cosmos can generate tokens for each of the avatar's movements; these act as time stamps that label the brain data. Labeling the data this way lets an AI model accurately interpret brain signals and translate them into the intended actions.

All of this data will be used to train a brain foundation model: a large deep-learning neural network that can be adapted to many applications without being trained separately for each task.

“As we accumulate more data, these foundational models improve and become more versatile,” states Shanechi. “The challenge is that these models need a substantial amount of data to truly serve as foundational.” Gathering that much data is difficult with invasive technology that most people are unlikely to adopt, she adds.

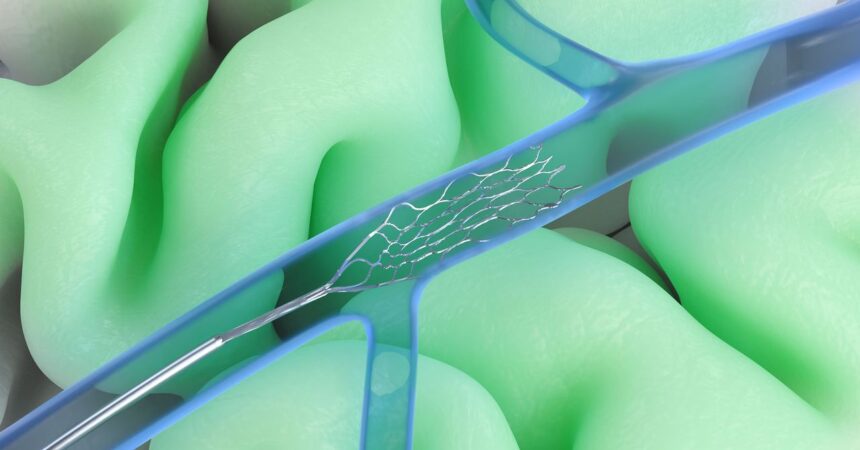

Synchron’s device is designed to be less invasive than many of its competitors. Unlike Neuralink’s arrays, which are implanted directly into brain tissue, or other devices placed on the brain’s surface, Synchron’s mesh tube is inserted at the base of the neck and threaded through a vein to record activity from the motor cortex. The procedure is similar to placing a stent in an artery and does not require brain surgery.

“The significant advantage here is that we have performed millions of stents globally. Across the world, there’s ample expertise to carry out stent procedures. Any standard cath lab can perform this. Hence, it’s a scalable process,” says Vinod Khosla, founder of Khosla Ventures, one of Synchron’s backers. Each year, nearly 2 million people in the United States receive stents to open coronary arteries narrowed by heart disease.

Since 2019, Synchron has implanted its brain-computer interface (BCI) in 10 subjects and has collected several years of brain data. The company is preparing to launch a larger clinical trial, a necessary step toward commercial approval for its device. There have yet to be any large-scale trials of implanted BCIs because of the risks of brain surgery and the technology’s complexity and cost.

Synchron’s ambition to develop cognitive AI is bold, and it carries risks of its own.

“What I envision this technology facilitating in the near term is increased control over one’s environment,” explains Nita Farahany, a professor of law and philosophy at Duke University who has studied the ethics of BCIs extensively. Longer term, Farahany expects that as AI models improve, they could move from interpreting explicit commands to predicting or suggesting actions that users may want to take with their BCIs.

“To grant individuals that seamless integration or autonomy over their surroundings, it necessitates decoding not just intended speech or voluntary motor commands, but also earlier indicators of intent,” she notes.

That raises difficult questions about user autonomy and about whether the AI is acting in line with what a person actually wants. It also raises the concern that a BCI could alter someone’s perceptions, thoughts, or intentions.

Oxley points out that these concerns already arise with generative AI. Using a tool like ChatGPT to generate content, for instance, blurs the line between what a person writes and what the AI produces. “I don’t believe this issue is unique to BCIs,” he says.

For people who can use their hands and voices, editing AI-generated content is easy, much like fixing an autocorrect error on a phone. But what if a BCI carries out an action the user did not intend? “Ultimately, the user will always guide the outcome,” says Oxley. He acknowledges, however, that humans will need a way to override AI-generated suggestions: “There will always need to be a kill switch.”